Building a deep learning chatbot in Python can be an exciting and challenging task. Here are the general steps to get started:

- Define the scope and purpose of your chatbot project. Consider what kind of chatbot you want to build and what specific tasks it will be able to perform. Will it be a customer support chatbot, a personal assistant, or a chatbot for gaming? This will help you determine the necessary features and functionalities.

- Choose the deep learning framework you want to work with. Popular options include TensorFlow, Keras (a high-level API most commonly used with TensorFlow), PyTorch, and Caffe. Each framework has its strengths and weaknesses, so choose the one that aligns with your project requirements.

- Collect and preprocess data. To train your chatbot, you will need a dataset that includes questions, answers, and conversations. You can collect data from various sources, such as customer support chat logs, social media, and online forums. Once you have the data, you will need to preprocess it by cleaning and normalizing the text.

- Train your model. You will need to choose a deep learning architecture for your chatbot and train it using your preprocessed data. Depending on the complexity of your model and the size of your dataset, training may take several hours or even days.

- Test and evaluate your model. After training your chatbot, evaluate its performance on held-out data that it did not see during training. If the model classifies user messages into intents, metrics such as accuracy, precision, recall, and F1 score measure how often it picks the right one.

- Integrate your chatbot. Once your chatbot is trained and tested, you will need to integrate it into your desired platform or application. This may involve setting up a web interface or API to communicate with your chatbot.

- Continuously improve your chatbot. To ensure that your chatbot is performing optimally, you will need to monitor its performance and continuously improve it based on user feedback.
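The evaluation step above can be sketched in plain Python. This is a minimal illustration, assuming the chatbot is an intent classifier; the labels, predictions, and function names below are hypothetical and exist only to show how the metrics are computed.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true:
        return 0.0
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive):
    """Return (precision, recall, f1) for one label treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical held-out intent labels and the model's predictions for them.
y_true = ["greeting", "goodbye", "greeting", "hours", "greeting"]
y_pred = ["greeting", "greeting", "greeting", "hours", "goodbye"]

print("accuracy:", accuracy(y_true, y_pred))
print("greeting (precision, recall, f1):", precision_recall_f1(y_true, y_pred, "greeting"))
```

In practice, a library such as scikit-learn provides these metrics ready-made; the hand-written versions are shown only to make the definitions concrete.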

Overall, building a deep learning chatbot in Python requires a solid understanding of deep learning, natural language processing, and software development. Many online resources, such as tutorials, documentation, and open-source projects, can help you get started.

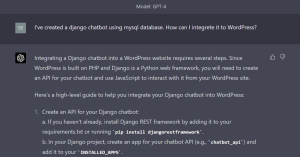

    import nltk, random, json, pickle

This line imports the libraries needed to build a chatbot with natural language processing techniques:

- `nltk` is the Natural Language Toolkit, which provides tools and resources for processing and analyzing natural language data.
- `random` is a built-in Python module for generating random numbers, useful for certain chatbot functionalities.
- `json` is a built-in Python module for working with JSON (JavaScript Object Notation) data, which is commonly used for storing and exchanging data in web applications.
- `pickle` is a built-in Python module for serializing and de-serializing Python objects, useful for saving and loading trained models.

These libraries can be used to create a chatbot that understands and responds to user input in natural language. The rest of the program would typically load and process a dataset, train a machine learning model, and create a chatbot interface.
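As a rough sketch of how the standard-library modules might be used together, the example below parses a small set of intents from JSON, round-trips the class labels through `pickle`, and picks a canned reply with `random`. The intents structure, the tag names, and the `pick_response` helper are assumptions made for illustration; they are not taken from the original project.

```python
import json
import pickle
import random

# Hypothetical intents data; a real project would usually read this from a JSON file.
INTENTS_JSON = """
{"intents": [
  {"tag": "greeting",
   "patterns": ["Hi", "Hello there"],
   "responses": ["Hello!", "Hi, how can I help?"]},
  {"tag": "goodbye",
   "patterns": ["Bye", "See you later"],
   "responses": ["Goodbye!"]}
]}
"""

# json: parse the text into ordinary Python dictionaries and lists.
intents = json.loads(INTENTS_JSON)["intents"]

# pickle: serialize the class labels to bytes and restore them again,
# as one might do to reuse the same label order after training.
classes = sorted(intent["tag"] for intent in intents)
restored = pickle.loads(pickle.dumps(classes))


def pick_response(tag, intents):
    """Return a randomly chosen canned response for the given intent tag."""
    for intent in intents:
        if intent["tag"] == tag:
            # random: vary the reply so the bot does not always answer identically.
            return random.choice(intent["responses"])
    return "Sorry, I did not understand that."


print(restored)
print(pick_response("greeting", intents))
```

Note that `pickle` should only be used to load files you created yourself, since unpickling untrusted data can execute arbitrary code.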

    from nltk.stem import WordNetLemmatizer
    from nltk.tokenize import word_tokenize
    import numpy as np
    from tensorflow.keras.models import load_model
    from sklearn.feature_extraction.text import CountVectorizer

These lines import additional libraries that are commonly used in natural language processing tasks:

- `WordNetLemmatizer` is an NLTK tool that lemmatizes words, that is, reduces them to their base or dictionary form. This standardizes words and reduces the number of unique words in a dataset.
- `word_tokenize` is an NLTK function that tokenizes text, splitting it into individual words or tokens. This is a common first step before creating feature vectors from text data.
- `numpy` is a Python library for numerical computing, useful for creating and manipulating arrays of numerical data.
- `load_model` from `tensorflow.keras.models` loads a previously saved Keras model, so the chatbot can reuse a trained network without retraining it.
- `CountVectorizer` from `sklearn.feature_extraction.text` is a scikit-learn tool that converts text data into numerical feature vectors of token counts, which can be used as input to machine learning models.

These libraries can be used to preprocess text data, create feature vectors, and load a pre-trained deep learning model for use in a chatbot. The rest of the program would typically define functions that process user input and generate chatbot responses.
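To make the idea of a feature vector concrete, here is a minimal bag-of-words sketch that uses only the standard library. It is a simplified stand-in for what `word_tokenize` and `CountVectorizer` do, not their actual implementation: the regex tokenizer is an assumption, no lemmatization is applied, and the sample patterns and function names are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocabulary(sentences):
    """Collect every distinct token across the sentences, in sorted order."""
    return sorted({token for sentence in sentences for token in tokenize(sentence)})


def bag_of_words(text, vocabulary):
    """Count how often each vocabulary word occurs in the text."""
    tokens = tokenize(text)
    return [tokens.count(word) for word in vocabulary]


# Hypothetical training patterns for a small chatbot.
patterns = ["Hi there", "What are your opening hours", "Bye bye"]

vocab = build_vocabulary(patterns)
vector = bag_of_words("hi, what are your hours?", vocab)

print(vocab)   # ['are', 'bye', 'hi', 'hours', 'opening', 'there', 'what', 'your']
print(vector)  # [1, 0, 1, 1, 0, 0, 1, 1]
```

A vector like this, one number per vocabulary word, is the kind of numerical input a trained model would receive in place of the raw user message.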